This article is about getting users involved early in iterative test cycles, and it discusses exploratory testing. It also presents the benefits of an incentive-driven, team-competition approach to testing.

Experienced project managers are familiar with the benefits of several best practices:

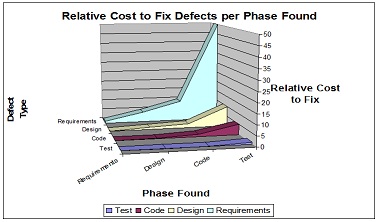

- Early user involvement in the SDLC provides valuable feedback on requirements and design; issues in these areas are much more costly to repair if they are not found until later project stages. Figure 1 illustrates the relative cost to repair defects as a function of the lifecycle phase in which the defect was found.

- Iterative development cycles improve productivity in identifying and repairing development issues, because developers can fix issues found in the previous test cycle while testers verify the next one. The cycles can be sized for optimum productivity, for example, based on subsystem functionality or on team size.

- Exploratory testing, when performed by subject matter experts, can accomplish a good portion of the verification goals without spending significant schedule time on extensive test case planning and writing. The important point to keep in mind is that exploratory testing is not ad hoc. To be truly effective, you must use subject matter experts, and they must be given a test charter that requires the following:

  - Detailed test outlines or scenarios are created and used as a guide during test execution. These must trace all functional requirements to verification activities and must identify the test flow, or schedule of tasks, needed to achieve verification.

  - Test result logs must be kept so that issues can be reproduced if necessary.

  - Defects must be adequately documented and reported.

  - Boundary values, functional paths, and associated parameters must be verified across multiple user iterations and variations of the test outline.
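The charter's traceability requirement (every functional requirement traced to at least one verification activity) can be checked mechanically before testing starts. The sketch below is a minimal illustration, assuming requirements are identified by simple IDs and each test outline lists the IDs it claims to verify; the requirement IDs, scenario names, and function names are all hypothetical.

```python
# Hypothetical data: the requirement IDs and scenario names below are
# illustrative placeholders, not taken from the SSALSA project.
requirements = ["REQ-001", "REQ-002", "REQ-003", "REQ-004"]

# Each test outline (scenario) lists the requirements it claims to verify.
scenario_coverage = {
    "Apply for benefits (sunny day)": ["REQ-001", "REQ-002"],
    "Apply with missing income data (rainy day)": ["REQ-002"],
    "Renew work-program enrollment": ["REQ-003", "REQ-009"],
}


def build_trace(reqs, coverage):
    """Map each requirement to the scenarios that claim to verify it."""
    trace = {req: [] for req in reqs}
    for scenario, covered in coverage.items():
        for req in covered:
            if req in trace:  # ignore IDs that are not in the requirement list
                trace[req].append(scenario)
    return trace


def untraced(trace):
    """Requirements with no verification activity, in their original order."""
    return [req for req, scenarios in trace.items() if not scenarios]


def unknown_references(reqs, coverage):
    """Requirement IDs cited by a scenario but missing from the requirement list."""
    cited = {req for covered in coverage.values() for req in covered}
    return sorted(cited - set(reqs))


if __name__ == "__main__":
    trace = build_trace(requirements, scenario_coverage)
    for req, scenarios in trace.items():
        print(f"{req}: {scenarios if scenarios else 'NOT TRACED'}")
    print("Untraced requirements:", untraced(trace))
    print("Unknown references:", unknown_references(requirements, scenario_coverage))
```

With this sample data, REQ-004 is reported as untraced and REQ-009 is flagged as a reference to a requirement that does not exist; either result would send the tester back to revise the outline before execution.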

Note: Exploratory testing does not replace system verification; it complements it. Your system verification may require complete testing of system functions, performance, interfaces, and so on, which may require engineering your test environments and test tools and writing formal, peer-reviewed test scripts in advance. Some system requirements can only be verified by measurement, analysis, or inspection, and these may require additional, non-exploratory test engineering approaches.

What is Competitive Exploratory Testing?

All three of the practices identified above can be brought together in what I call Competitive Exploratory Testing. The concept is simple. When given incentives, people work hard. When workers are part of a team, they feel compelled to help the team succeed. When multiple teams compete with one another, with incentives to win or place well in a contest, team productivity increases. The contest really heats up (and the fun begins) when each team's progress is plainly visible to all the teams, as the example below shows.

The SSALSA Project

In 2002 I joined a large-scale client-server project in New Mexico. The objective of the project was to replace a mainframe financial system with a web-based server system. The program is supported by over one thousand case workers in a dozen state field offices, where low-income residents may apply for and receive various types of financial support, work programs, and training. The project was supported by a contracted development team and by 16 state employees (system users). The state team was collocated in a large test lab with a workspace and workstation for each member, and it received instruction in the processes for creating test scenarios, running the tests, and documenting test results and defects.

The project management team had set up an iterative development approach, and the state test team was receiving a build to test about every three weeks. Defects were being found and worked, but given the number of coders and development tasks, I suspected that there were many more undetected defects. At this point I devised the competitive team approach and the hot pepper contest. I presented the contest requirements and team approach to the team, and they accepted it, some more eagerly than others; the less enthusiastic members changed their tone once the contest was underway. The testers divided themselves into four teams and came up with their team names (The Chipotle Champs, The Three Habaneras, etc.).

I set up a large wall space in the test lab for monitoring the contest. We tacked a large sheet of white paper to the wall and drew four horizontal rows, each labeled with the name of a team. I found a red chili pepper icon on the web, sized it to fit onto an adhesive label, and printed the peppers onto many pages of self-adhesive labels so I had a large reserve of peppers. Each team was promised one hot pepper for every valid defect found. Whenever a defect was found, we pasted a hot pepper in that team's row on the whiteboard.

Everyone could see how their team and every other team was performing, and everyone became enthusiastic about finding defects. I promised team and individual awards for the most peppers during a three-week run on a significant build cycle. At the end of this test period the board was plastered with peppers... you should have seen them test! In their exploratory mode, they tested their outlined scenarios with sunny-day paths, rainy-day paths, boundary conditions, error conditions, and more. In this competitive mode they were truly on the hunt for defects. I also observed that the normally less productive testers became more productive as they tried to help their team succeed. The awards were pairs of movie passes and $10 dinner coupons for the winning team members, and everyone received a chili pepper coffee mug and a chili pepper bandana. The team had a great time, and the project found over 200 defects (many of them critical) in this significant build. After the contest, we observed continued productivity momentum as the staff kept working together in teams.

Your Competitive Exploratory Testing Plan

- Find the subject matter experts (test engineers plus end users) available to support the activities.

- Stage the team in a large room with sufficient workstations.

- Provide process training for the users who are not test engineers: scenario writing, test logging, and defect reporting.

- Divide the staff into self-organized, equal-sized test teams.

- Set up the test progress whiteboard where all the teams can clearly see it.

- If collocation is not possible, use a web page as a virtual whiteboard.

- Devise the contest around the parameters of the project, the location of the team, or some common denominator the team can relate to, such as sports teams.

- Update the status whiteboard three or four times per day so the teams see their output accumulate throughout the day. Make a visible commotion over the success of a person or team. Keep it fun.

- At award time, everyone gets an award. Discuss the importance of finding defects early and often. Thank them for a job well done.
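A virtual whiteboard can be as simple as a script that recomputes the tally at each of the three or four daily updates. The sketch below mirrors the pepper rule from the example (one pepper per valid defect) and assumes each defect record carries the reporting team and a triage flag marking it valid; the `Defect` record, the function names, and all sample data except the first two team names are hypothetical.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Defect:
    team: str
    summary: str
    valid: bool  # decided at triage; only valid defects earn a pepper


def pepper_counts(teams, defects):
    """One pepper per valid defect; teams with no valid defects show zero."""
    counts = Counter(d.team for d in defects if d.valid)
    return {team: counts[team] for team in teams}  # Counter returns 0 if missing


def render_board(counts, pepper="*"):
    """Plain-text wall chart: one row per team, most peppers first."""
    width = max(len(team) for team in counts)
    rows = sorted(counts.items(), key=lambda item: (-item[1], item[0]))
    return "\n".join(f"{team:<{width}} | {pepper * n} ({n})" for team, n in rows)


# The first two team names come from the article; "Team C", "Team D",
# and all defect records are hypothetical placeholders.
teams = ["The Chipotle Champs", "The Three Habaneras", "Team C", "Team D"]
defects = [
    Defect("The Chipotle Champs", "Crash on empty household size", True),
    Defect("The Three Habaneras", "Wrong total at income boundary", True),
    Defect("The Chipotle Champs", "Duplicate of an earlier report", False),
    Defect("Team C", "Date field accepts month 13", True),
    Defect("The Chipotle Champs", "No error message on timeout", True),
]

if __name__ == "__main__":
    print(render_board(pepper_counts(teams, defects)))
```

Sorting the rows by pepper count puts the current leader at the top, which keeps the competitive pressure visible in the same way the physical wall chart did; the duplicate report is excluded because it failed triage.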

* Assurance Technology Symposium, NASA IV&V Facility, 5 June 2003.