Joanne Perold writes that you cannot just look at the numbers; the context behind the data is often far more valuable. Metrics can tell a compelling story or provide meaningful information to anyone who wants to pay attention, but when the focus is only on the number, it can be a disaster.

Metrics have their place and can be extremely useful—but they can also drive dysfunction when used in the wrong way. The minute you assign a number to something, some people begin to focus more on the number itself and not on the story it’s telling or the information it’s providing. In my experience, you cannot just look at the numbers; the context behind the data is often far more valuable. Metrics can tell a compelling story or provide meaningful information to anyone who wants to pay attention, but when the focus is only on the number, it can be a disaster.

Velocity as a measure

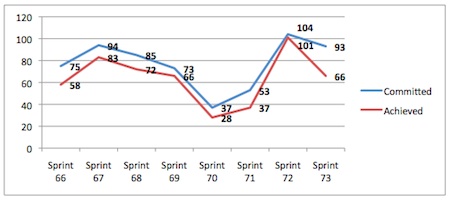

I had a team that consistently had a fluctuating velocity. Over a period of eight sprints the team was not even vaguely consistent. The product owner found planning difficult. I decided to have a look at the details and keep track of the information behind the numbers. The team was consistently losing and gaining members due to contractors moving for visa renewals and requirements and people going on leave. The team size was never consistent, so how could their velocity possibly be? This was not an entirely useful number for the team as a standalone. It was, however, very useful in determining that communication was a huge issue when team members were abroad. It was a starting point to try to solve the problem.

Figure 1. Team velocity over the eight sprints.
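To make the team-size point concrete, here is a minimal sketch that compares raw velocity with velocity per person-day of availability. Every name and number in it is invented for illustration; none of it is the team's actual data, and the figures are deliberately constructed so that capacity explains all of the variation.

```python
from statistics import mean, pstdev

# Hypothetical sprint records: (label, points completed, person-days available).
# Invented numbers, chosen so that capacity alone explains the swings.
sprints = [
    ("A", 30, 50),
    ("B", 18, 30),
    ("C", 24, 40),
    ("D", 12, 20),
]


def coefficient_of_variation(values):
    """Spread relative to the mean; 0.0 means perfectly steady."""
    return pstdev(values) / mean(values)


raw_velocity = [points for _, points, _ in sprints]
per_person_day = [points / days for _, points, days in sprints]

raw_cv = coefficient_of_variation(raw_velocity)
normalized_cv = coefficient_of_variation(per_person_day)

print(f"raw velocity spread: {raw_cv:.0%} of its mean")
print(f"points per person-day spread: {normalized_cv:.0%} of its mean")
```

Real data will never be this tidy. The point is only that a number that looks erratic by itself can look quite different once the context behind it, here the changing capacity, is placed next to it.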

Another problem the team had was a distinct lack of direction. The backlog was poorly groomed and prioritized, and occasionally it was almost non-existent. You can see the effect in the above graph in sprints 70 and 71, which were particularly bad. A poorly groomed backlog and too little direction were affecting what the team committed to, and observing the patterns in the numbers reinforced that understanding. When this was taken to the team members, they put practices in place to ensure more visibility and to encourage better stories and prioritization from the product owner.

Being exposed to this information was invaluable for the team. It helped members ask questions about what was happening and what they needed to do better going forward. The numbers themselves were fairly worthless, but the patterns were hugely insightful.

How does velocity become dysfunctional?

I have heard managers make demands like, “The team needs to double its velocity in n sprints.” What I do not understand is how anyone can fail to see the dysfunctional behavior waiting to happen when a team is handed such a mandate. The behavior I have observed most often in this situation is the team inflating the story points on each story. Every three becomes a five and every five becomes an eight. The only thing this achieves is turning an insightful metric into a number that adds little or no value. The most unfortunate part is that it can affect trust: both the trust the team feels from management and the trust the team has in management. In his business management book The Five Dysfunctions of a Team, Patrick Lencioni describes the absence of trust as the first dysfunction, the one at the base of his model. In my experience, trivializing metrics to meet a manager’s demanding goal can start a vicious circle of dysfunction, with lack of trust at the core.
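The arithmetic of inflation is easy to sketch. The example below bumps every estimate one step up a Fibonacci-style scale and compares the resulting velocity with the number of stories actually delivered. The estimates are invented, and the mapping beyond "three becomes five, five becomes eight" is my own assumption about how the scale continues.

```python
# One step up a Fibonacci-style point scale. Only 3 -> 5 and 5 -> 8 come
# from the scenario described above; the other entries are assumed.
INFLATE = {1: 2, 2: 3, 3: 5, 5: 8, 8: 13}

# Hypothetical estimates for the stories finished in one sprint.
honest_estimates = [3, 5, 3, 5, 8]
inflated_estimates = [INFLATE[points] for points in honest_estimates]

honest_velocity = sum(honest_estimates)
inflated_velocity = sum(inflated_estimates)
stories_delivered = len(honest_estimates)  # the same stories either way

print(f"velocity before inflation: {honest_velocity}")
print(f"velocity after inflation:  {inflated_velocity}")
print(f"stories delivered in both cases: {stories_delivered}")
```

The reported velocity rises by more than half while the work delivered is unchanged, which is exactly why the inflated number stops telling anyone anything.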

Bug information as a big picture

Information on bugs can be very useful for both testers and teams. Understanding the health of a product includes knowing how many bugs it has and which of those the testers and product owners consider non-negotiable for launch. Comparing the number of new bugs from sprint to sprint might show that in the sprints with more bugs, the team was pushing too hard to finish quickly and quality slipped. By looking at this information, the team can analyze the kinds and numbers of bugs and decide whether the current trade-off between quality and speed is acceptable. If the team, testers, and product owner are happy with that trade-off, then all is good. If not, then a new negotiation needs to take place.

How long, on average, it takes a critical bug fix to go live can also be valuable information for a team. If getting a fix out is difficult and slow, for example because the team has to ship the last fifteen features along with it and regression test for two weeks before it can release, then maybe there is a problem. If teams have this information and understand its impact, then they can work on solving the problem.
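As a rough sketch of how that average might be tracked, the snippet below computes the days between a fix being merged and the fix going live. The bug IDs, the dates, and the choice of "merged" as the starting point are all assumptions made for illustration; a team would substitute whatever events its own tracker records.

```python
from datetime import date
from statistics import mean

# Hypothetical critical bugs: (id, date the fix was merged, date it went live).
critical_fixes = [
    ("BUG-1", date(2024, 3, 1), date(2024, 3, 15)),
    ("BUG-2", date(2024, 3, 4), date(2024, 3, 15)),
    ("BUG-3", date(2024, 3, 20), date(2024, 4, 5)),
]

# Days each fix waited between being ready and reaching users.
days_to_live = {
    bug_id: (live - merged).days for bug_id, merged, live in critical_fixes
}

average_days = mean(list(days_to_live.values()))
slowest_bug = max(days_to_live, key=days_to_live.get)

print(f"average days from merge to live: {average_days:.1f}")
print(f"slowest fix: {slowest_bug} ({days_to_live[slowest_bug]} days)")
```

The average alone says little. Looking at which fixes waited longest, and what they were waiting for, is where the conversation about the release process starts.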

Knowing the overall health of the build or product is far more useful than knowing that you have ten unresolved bugs. Those ten bugs could be symptoms of something serious within the system, or they could be just minor text and UI changes that need to be implemented.

What’s wrong with bug counts?

The other day I was speaking to the product owner of an interesting project. He was complaining about the number of bugs logged that were essentially duplicates. We spoke for a bit about the kinds of bugs he was seeing, and I asked how the testers were measured. The testers were measured on bug counts. The metric had encouraged behavior that put the focus on completely the wrong things. The testers were concentrating on easy-to-find, visible UI bugs that could be logged separately per screen or per event to keep their counts up. They were not focusing at all on getting information about the product health as a whole because those tests take time and patience and intricate thinking to invent and run. And it’s those tests that provide valuable information if they yield any issues. When used as a measure in this way, the metric was totally driving the wrong behavior.
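A small sketch shows how logging the same defect once per screen distorts a per-tester count. The testers, defect names, and screens are invented, and the sketch assumes each report has already been tagged with its underlying defect, which in practice is the hard part.

```python
from collections import Counter

# Hypothetical reports: (tester, underlying defect, screen where it was logged).
reports = [
    ("tester_a", "misaligned-label", "login"),
    ("tester_a", "misaligned-label", "signup"),
    ("tester_a", "misaligned-label", "settings"),
    ("tester_a", "typo-in-footer", "login"),
    ("tester_b", "order-total-wrong-after-refund", "checkout"),
]

# What a bug-count metric sees: one point per report.
raw_counts = Counter(tester for tester, _, _ in reports)

# What the product actually suffers from: distinct defects per tester.
defects_by_tester = {}
for tester, defect, _ in reports:
    defects_by_tester.setdefault(tester, set()).add(defect)
distinct_counts = {t: len(d) for t, d in defects_by_tester.items()}

for tester in raw_counts:
    print(f"{tester}: {raw_counts[tester]} reports, "
          f"{distinct_counts[tester]} distinct defects")
```

By raw count the first tester looks four times as productive, yet the single report from the second tester is the one that required intricate testing to find. Even the distinct count says nothing about severity, so it is still only a starting point for a conversation.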

The meaning in the metrics

Metrics come in all shapes: velocity; bug counts; code coverage; cycle time; live releases per sprint, week, or month; and so on. Each of these can give the team valuable information and make previously unknown things visible. If the focus of the metric is only on how much, how many, or how often, then I believe its value to the team diminishes. The wrong behaviors are rewarded and encouraged, and far too often, trust levels are affected. In my experience it is far more useful, and far less dysfunctional, to pay attention to the meaning and the nuances rather than just the numbers.

User Comments

The testing literature warns against using bug counts to assess testers. Here's another way that bug counting can give a mistaken impression. Many years ago I worked in a shop with a weak tester. This person was not let go by the company for a long time because they were assigned to test part of the code that was very poorly written. Though the tester was unskillful, the person could not help but find a large number of bugs. Later, a new director was hired who ended the practice of using bug counts to rate tester ability.