In his CM: the Next Generation series, Joe Farah gives us a glimpse into what CM experts will need to tackle and master, based upon industry trends and future technology challenges.

Build management covers a lot of ground because in software development, builds are fairly significant events that touch nearly the entire product team. Let's look first at the operations of defining and identifying builds before moving on to the topics of comparing builds and of build automation.

Defining a Build

There are different ways to define what is in a build. Some do this by tagging every file revision with the build ID. This can be tedious or, if automated, resource-intensive. Some define a build by defining a baseline. A baseline, though, is meant to be a change reference. Creating a new baseline definition every time a build has to be tracked sort of defeats the purpose of having a baseline reference. It can also be resource-intensive.

I like to define a build by starting with a pre-existing baseline reference and adding a set of changes to it. I also like to define a build by starting with a previous build and adding changes to it. Either of these options works well for me. In the first case, baseline references can be defined according to what best suits the CM process. The build clearly defines what has changed since the baseline and does so in terms of change packages (as opposed to file revisions). In this case, build = baseline + set-of-changes.

The second case comes from more of a realization that builds are done serially, adding changes each day or so to do the next build. In this latter case, build = previous-build + set-of-changes.

This latter definition is actually preferable, as the build/previous-build relationships form a tree that shows how builds evolve. This gives a history of a build. By combining this with a decent query/navigation facility in a next-generation CM tool, you can quickly navigate through the changes that have gone into recent builds.
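The two definitions can be sketched in a few lines of Python. This is only an illustration of the idea, not how any particular CM tool stores builds; the `Build` class, its fields, and the build and change-package names are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Build:
    """Hypothetical build record: a parent (baseline or previous build) plus changes."""
    name: str
    changes: frozenset = frozenset()   # change-package IDs added by this build
    parent: Optional["Build"] = None   # None means this record is the baseline itself

    def history(self):
        """Return the chain of records from the baseline up to this build."""
        chain = []
        node = self
        while node is not None:
            chain.append(node)
            node = node.parent
        return list(reversed(chain))

    def all_changes(self):
        """Every change package included since the baseline."""
        result = set()
        for build in self.history():
            result |= build.changes
        return result


# build = baseline + set-of-changes
baseline = Build("rel2.baseline")
b1 = Build("rel2.b1", frozenset({"cp101", "cp102"}), parent=baseline)
# build = previous-build + set-of-changes
b2 = Build("rel2.b2", frozenset({"cp103"}), parent=b1)

print([b.name for b in b2.history()])
print(sorted(b2.all_changes()))
```

Because each record points at its parent, walking the chain gives the history of a build, and several builds sharing a parent give the tree described above.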

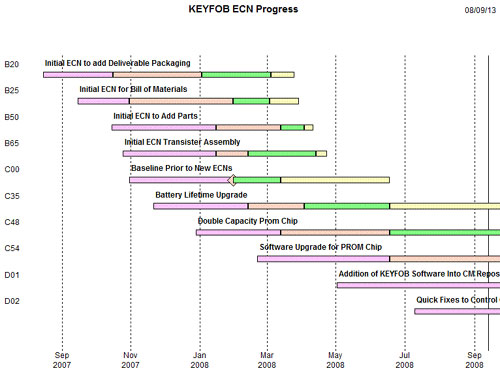

First Order Build Object, With an Identity

A build needs to be a first order object in a CM tool. A tag just doesn't cut it. The build will go through promotion levels. Significant milestones for the build will be tracked, and it should be possible to add attributes against the build besides the baseline it's built upon, the previous build, and the set of changes. For example, a description of why the build was created, the build results, perhaps a distinguishing title, the product it applies to, and the release stream would all normally be tracked against a build record. Call it a "build," a "build record," or an "ECN" (Engineering Change Notice), whichever best suits your process environment. I like to refer to it as a "build notice" or "ECN" prior to creating the build, while the notice of what's going into the build is being created, and then as a "build" in the past tense, once the build has been created. Below is an example Gantt-like chart showing the progression of successive builds as they reach the various milestones, or states, along their useful lifetime.

The build should have a state flow associated with it. It should go from a planned state, to a notice definition state, to a built state, and then on to various test and production states such as: sanity tested (stested), integration tested (itested), verification tested (vtested), alpha test, beta test, production. Obviously not all builds are going to go through all of these states. Most will wind up at a "stested" or "itested" state.
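As a rough sketch of such a state flow, the states named above can be modeled as an ordered enumeration with a record that logs each milestone. The class names are hypothetical, and the strictly one-step-at-a-time promotion rule is an assumption made for simplicity; a real process might allow skipping or demoting states.

```python
from enum import IntEnum


class BuildState(IntEnum):
    """States from the text, in promotion order."""
    PLANNED = 0
    NOTICE = 1       # notice definition
    BUILT = 2
    STESTED = 3      # sanity tested
    ITESTED = 4      # integration tested
    VTESTED = 5      # verification tested
    ALPHA = 6
    BETA = 7
    PRODUCTION = 8


class BuildRecord:
    """Hypothetical first order build object carrying its own state and milestones."""

    def __init__(self, name):
        self.name = name
        self.state = BuildState.PLANNED
        self.milestones = [BuildState.PLANNED]

    def promote(self):
        """Move to the next state (assumption: one step at a time, no skipping)."""
        if self.state is BuildState.PRODUCTION:
            raise ValueError(f"{self.name} is already in production")
        self.state = BuildState(self.state + 1)
        self.milestones.append(self.state)
        return self.state


record = BuildRecord("rel2.b2")
for _ in range(3):
    record.promote()
print(record.name, record.state.name)
```

The milestone list is the kind of data a Gantt-like progression chart could be drawn from, once a timestamp is stored with each entry.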

This first order build object must have a clear and unambiguous name associated with it, whether automatically generated by the CM tool or manually selected by the build/CM team. The name must be integrated into the actual build so that the build is self-identifying. It must also be used for identifying the target of verification test sessions. And it must be possible, at all times with the CM tool, to select by build name a viewing context which exactly matches the build contents.

Ideally, the build identifier would be attached to any problem report raised against the product. The build identifier is a central component of traceability, because each build, and the set of operations performed with it, encapsulate a huge amount of information. What tests have been run against it? What problems have been fixed? Where is the build deployed? What source revisions are used to create it? What new features are incorporated into it?

Builds as the Key Point of Comparison

In some cases, these questions are really questions of comparison: What problems have been fixed since the previous build? What problems have been fixed since the build that we're currently using at our site? Except, perhaps, for source deltas that are generally performed at an update/change package level, or sometimes at a file level, builds are one of the most natural points of comparison. One reason is that when a build breaks, you want to compare it to what went into the last (successful) build. Another is that a customer always wants to know what new features/fixes they receive by moving to a new release (i.e., at a specific build level). Another is that the comparison is usually at a more macro and meaningful level than, say, source code or file revisions, although there should be no reason why you can't do a source delta between two builds. More often, though, one is concerned with the list of changes, problems, features, test results, etc.

Build comparison helps to focus initial integration testing on the problems fixed and features introduced. It helps to track down build problems by allowing a quick look at the newly introduced changes, hopefully with a drill-down capability so that suspicious changes can be directly inspected. It helps to generate release notes. But all of this assumes there is appropriate traceability between the builds and changes, and between the changes and their driving requests.
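A minimal sketch of that kind of comparison, assuming each build records its previous build and its own change packages, and each change package is traceable to a driving request. All of the data, identifiers, and function names here are made up for illustration.

```python
def changes_since(build, earlier, parents, changes):
    """Change packages added after `earlier`, up to and including `build`."""
    added = []
    node = build
    while node is not None and node != earlier:
        added.extend(changes.get(node, []))
        node = parents.get(node)
    if node is None:
        raise ValueError(f"{earlier} is not an ancestor of {build}")
    return sorted(added)


def release_notes(build, earlier, parents, changes, requests):
    """Group the driving requests behind the new changes by kind."""
    notes = {"problem": [], "feature": []}
    for cp in changes_since(build, earlier, parents, changes):
        kind, ref = requests[cp]
        notes[kind].append(ref)
    return notes


# Hypothetical traceability data: build -> previous build, build -> changes,
# change package -> (request kind, request ID).
parents = {"b3": "b2", "b2": "b1", "b1": None}
changes = {"b1": ["cp1"], "b2": ["cp2", "cp3"], "b3": ["cp4"]}
requests = {
    "cp1": ("feature", "f-1"),
    "cp2": ("problem", "pr-17"),
    "cp3": ("feature", "f-4"),
    "cp4": ("problem", "pr-21"),
}

print(changes_since("b3", "b1", parents, changes))
print(release_notes("b3", "b1", parents, changes, requests))
```

The same walk answers both "what went in since the last successful build?" and "what does a customer get by moving from b1 to b3?"; only the choice of the earlier build differs.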

Build Automation

I've seen companies that perform system builds every weekend. I've seen others that perform hundreds of builds every night. In both cases automation is important, not only because it reduces effort, but even more so because it helps to eliminate human error. Build automation requires a number of components to be in place: the build tools, the build platforms, the build dependencies and the build definition.

One aspect of build automation deals with retrieving and executing makefiles or other build scripts. Some tools, however, support the generation of these makefiles and build scripts. In this case, both the developer and build manager have their workload simplified, as they don't have to manually maintain these scripts. In some cases, build automation must deal with distributing the build function across multiple machines and differing platforms. Normally, it is the build tools themselves that help to control this distribution function, and so from the CM/ALM tool perspective, the question becomes more one of integration with build tools.
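To give a feel for what generating a build script means, here is a deliberately small sketch that renders makefile text from dependency data. The function name and the data layout are hypothetical, it assumes the first dependency of each object is its C source file, and it is not meant to reflect how any specific CM tool generates its scripts.

```python
def generate_makefile(target, objects):
    """Render makefile text from a map of object file -> list of dependencies.

    Assumption: the first dependency of each object is the source file to compile.
    """
    object_names = " ".join(sorted(objects))
    lines = [
        f"{target}: {object_names}",
        f"\t$(CC) -o {target} {object_names}",   # recipe lines must start with a tab
        "",
    ]
    for obj, deps in sorted(objects.items()):
        lines.append(f"{obj}: {' '.join(deps)}")
        lines.append(f"\t$(CC) -c {deps[0]} -o {obj}")
        lines.append("")
    return "\n".join(lines)


# Hypothetical dependency data, as a tool might derive it from the source tree.
objects = {
    "main.o": ["main.c", "util.h"],
    "util.o": ["util.c", "util.h"],
}
makefile_text = generate_makefile("app", objects)
print(makefile_text)
```

The point is only that when the dependency information already lives in the tool, the script can be derived from it rather than maintained by hand.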

Deployment

When we talk about deployment there are many different aspects that can apply to any given environment. These include:

- Deploying software to the build machine(s) for the build operation

- Deploying web pages to a web server, and potentially multiple versions of the web pages

- Deploying built software on the set of machines (e.g., for in-house operations)

- Automatically allowing uploading of new software from a central site (pulling)

- Deploying files for building packages that are to be used for downloading software

- Delivering download packages to download servers

- The ability to create incremental pac

When we look at the management of these deployment operations, we can start to identify the types of capabilities the CM solution must support. These generally fall into two basic categories:

1) Structured retrieval of files from the CM tool: Whether it's for doing builds or otherwise, the CM tool must give the user the flexibility to deploy files in the right places. Ideally, this is an automated process that does not require any complex scripting, other than a few "retrieval" commands. A good tool will let you specify what needs to be deployed. Ideally you can specify the build identifier for builds. For other items, you should be able to specify CM logical directories and where they are to be mapped to in the real file system. Better tools will let you select what's being deployed based on a set of deployment options (e.g., English/French/Spanish, promotion level, data configuration, etc.).

One of the more advanced CM capabilities allows objects within the CM tool to be visible directly from the operating system. For example, both IBM's ClearCase and, more recently, Neuma's CM+ (VFS option) allow you to specify a set of context views and have those context views appear as directory trees in the file system, typically at different mount points. This does not necessarily simplify the deployment process dramatically, but it does eliminate one step, though often with some performance hit. If the deployment is for packaging purposes, the performance hit is likely negligible. If it's for a build operation, though, the hit can be somewhat significant. One of the more immediate benefits of this virtual file system feature is that it lets you deploy, for example, a web site at one release level, and then modify the deployment simply by changing the context view parameters, or even just through promotion of the software updates/change packages. In some cases, the views may even be conditional on the user accessing them. This provides a great deal of flexibility without the need to actually do any deployment. Instead, the views are set up appropriately and changes to the deployment can be automatically reflected by the views. This will only work in situations where the target of the deployment is within the reach of the CM tool itself. But it can be extended to the packaging process, whereby the packaging can be repeated without having to re-deploy files.

2) Third party tool interactions: Deployment will, in some cases, have to deal with packaging. Typically, this is packaging of software for download by the target users, but it may also refer to packaging for the purpose of writing to CD or DVD. There are many packaging technologies that deal with installation issues, security issues, and even upgrade issues. Selecting the right packaging tools is as important as having good CM tools that can adequately be integrated with the packaging tools.

The CM tool should be able to invoke other tools, signal to other tools the completion/readiness of operations, and export data in the structural formats required by the tools. In some cases, the CM tool should be able to monitor deployments, and perhaps calculate differences between existing and proposed deployments. Often a deployment package is readied and a special file is used to signal to the download targets that the new package is available.
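Both categories can be illustrated with one small sketch: first map CM logical directories to real file system locations, filtered by deployment options, and then calculate the difference between an existing and a proposed deployment. The function names, the paths, the revision labels, and the rule that an untagged file applies to every option are all assumptions for the example, not features of a specific tool.

```python
from pathlib import PurePosixPath


def plan_retrieval(mappings, files, options):
    """Map logical CM paths to target paths, keeping files that match the options.

    mappings: {logical_dir: target_dir}
    files:    [(logical_path, revision, tags)]; a missing tag matches any option
    Returns   {target_path: revision}
    """
    plan = {}
    for logical_path, revision, tags in files:
        if any(tags.get(key, value) != value for key, value in options.items()):
            continue
        for logical_dir, target_dir in mappings.items():
            try:
                relative = PurePosixPath(logical_path).relative_to(logical_dir)
            except ValueError:
                continue  # file is not under this logical directory
            plan[str(PurePosixPath(target_dir) / relative)] = revision
            break
    return plan


def deployment_diff(existing, proposed):
    """Compare two {target_path: revision} maps."""
    return {
        "add": sorted(p for p in proposed if p not in existing),
        "update": sorted(p for p in proposed
                         if p in existing and existing[p] != proposed[p]),
        "remove": sorted(p for p in existing if p not in proposed),
    }


mappings = {"/product/web": "/var/www/site", "/product/docs": "/srv/docs"}
files = [
    ("/product/web/index.html", "r7", {"language": "English"}),
    ("/product/web/index_fr.html", "r5", {"language": "French"}),
    ("/product/docs/guide.txt", "r3", {}),
    ("/product/src/main.c", "r12", {}),
]
proposed = plan_retrieval(mappings, files, {"language": "French"})
existing = {"/var/www/site/index_fr.html": "r4", "/srv/docs/old.txt": "r1"}

print(proposed)
print(deployment_diff(existing, proposed))
```

A diff of this kind is also a natural input for an incremental package, or for the signal file that tells download targets a new package is ready.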

Deployment Management

Many of the deployment issues are really outside of the scope of CM, and are more application specific. Although CM tools can help, a fair bit of the deployment customization is very application specific. For this reason, there are limited deployment capabilities that can appear in CM/ALM tools.

However, as with most management processes and data, the CM tool should be able to help track the states of deployments. By extending the ALM functions to track deployment sites and their states (i.e., what revisions of software/data they currently have deployed), CM tools can add a generic capability that is often difficult to manage or is relegated to CRM applications. Deployment site tracking might take the form of a customer tracking system, or of a server tracking system. The ability to extend the ALM tool without a lot of effort is a key capability that is visible in some tools already, notably the Canadian tools MKS and CM+.

This level of deployment management is crucial to managing support teams. If customers/servers/whatever can be upgraded to the most recent releases/deployments, support calls and expertise requirements become much more uniform. Having the ability to identify stragglers (using old releases) and to focus on moving them forward will ultimately benefit the support teams and the bottom line.
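Identifying stragglers is a simple query once deployment sites and their deployed builds are tracked. The sketch below assumes a plain mapping of site to deployed build and an ordered list of builds; the site names, build names, and the `find_stragglers` function are hypothetical.

```python
def find_stragglers(sites, build_order, current):
    """Return sites whose deployed build is older than `current`, oldest first.

    sites:       {site_name: deployed_build}
    build_order: build names from oldest to newest
    """
    rank = {name: position for position, name in enumerate(build_order)}
    behind = [site for site, build in sites.items() if rank[build] < rank[current]]
    return sorted(behind, key=lambda site: (rank[sites[site]], site))


# Hypothetical deployment-site records.
build_order = ["rel2.b3", "rel2.b5", "rel2.b7"]
sites = {
    "acme": "rel2.b7",
    "globex": "rel2.b3",
    "initech": "rel2.b5",
    "hooli": "rel2.b7",
}

print(find_stragglers(sites, build_order, "rel2.b7"))
```

Sorting the oldest deployments first gives the support team an obvious order in which to move sites forward.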

Overall, my preference is to deal with build management through strong tool capabilities, and to deal with deployment management on a case-by-case basis using scripting in cooperation with the data management capabilities of a CM tool. When I see a tool that allows me to scroll through products and then to scroll through builds within a given product stream, while also presenting me with all of the traceability information, my interest grows. This is the beginning of a new way of looking at CM: instead of having to dig out information, it is all just there. I only have to name the product and stream and scroll through until I see what I'm looking for. This is a far cry from the days when a build was simply a tag on a set of file revisions.