This article focuses on the methodology adopted during the requirements and functional specification phases of a project. The chosen model for requirements engineering was founded on a combination of Six Sigma techniques and a set of best practices adopted from within the organization.

The requirements engineering process might seem daunting at first, given the uncertainties and unknowns involved, but the trick is to adopt a process that fits your needs and is recognizable and repeatable across your domain. Even though it's often ignored or done in a hurry, requirements management is easily the most important phase in your development lifecycle. Why is requirements management so important? When it is neglected, the following problems tend to surface:

- Requirements cannot be traced to design and code

- Requirements churn, which leads to scope creep

- Modules don't integrate

- Code is hard to maintain

- Poor performance under load

- Build-and-release issues

- Ultimately, user or business needs are not met

Problem Statement/Our Project Scenario

The client wanted to roll out its next-generation factory-automation equipment ahead of a competitor. This called for enhancing features in the existing product, making changes to the controller configuration, and releasing a product that was superior in performance and efficiency. The challenges for the software team included adapting to ever-changing customer priorities, finding a development model that would fit the bill, implementing an effective change management process, and coming up with a test strategy and infrastructure to ensure as smooth and high-quality a release as our committed schedules would allow.

The Methodology

The SDLC model chosen was a combination of the iterative and V-models. Even though we had chosen our model, we had to wait for the requirements, which arrived late and incomplete. We assumed it would be futile to wait for all the requirements to come in and then start planning for testing, validation, and verification activities.

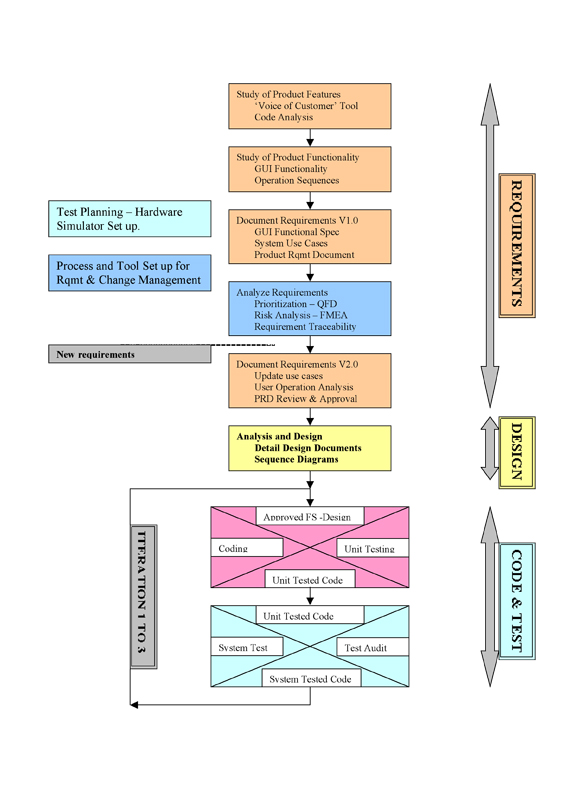

Development started on tasks such as high-level design, non-functional requirements analysis, risk analysis, and code analysis. Thus, we were prepared by the time the customer delivered the enhancement requests for the new product. The hybrid model called for just the requirements specification (RS) phase, followed by an incremental, iterative implementation cycle for the coding and testing phases of the V-model, as shown in Figure 1.

Figure 1: SDLC phases and activities.

This article focuses on the methodology adopted in the requirements and functional specification phases. The chosen model for requirements engineering was founded on a set of ten best practices adopted from within the organization:

Best Practice 1: Study of product features

Best Practice 2: Detailed analysis of product features

Best Practice 3: Functionality analysis

Best Practice 4: Code analysis (parallel bottom-up approach)

Best Practice 5: Product requirement document (PRD)

Best Practice 6: Sequence diagrams and detailed design documents

Best Practice 7: Quality standards compliance

Best Practice 8: Unified change management process and tools

Best Practice 9: Hardware soft simulators

Best Practice 10: Six Sigma methodology

We started with the study of product features. The objective was to study the features offered by existing product variants so we could compile a list of anticipated features in the target product. This exercise also gave the onsite and offshore teams an opportunity to identify with the target machine to be built, based on the existing feature inventory. The deliverable from this activity was the generic specification (G-Spec) document.

Then we completed a detailed analysis of product features. The primary objective of this exercise was to expand each feature in detail to the design specification level based on the customer's input. The Voice of the Customer (VOC), a proven Six Sigma tool, was adopted for this purpose: interviews with requirement providers followed a Who, What, Why, When, Where, and How questioning style. This exercise also revealed gaps in our understanding of the product and helped improve our knowledge of the industrial automation domain. The deliverable from this phase of the project was an updated VOC document.
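A VOC entry of this kind can be pictured as a simple record with one slot per question. The sketch below is only an illustration, not the project's actual VOC template: the class name `VocEntry`, its fields, and the sample feature are all assumptions. It shows how unanswered questions can be listed so that gaps in understanding become visible.

```python
from dataclasses import dataclass, fields


@dataclass
class VocEntry:
    """One feature expanded with the six VOC questions (hypothetical layout)."""
    feature: str
    who: str = ""
    what: str = ""
    why: str = ""
    when: str = ""
    where: str = ""
    how: str = ""

    def open_questions(self):
        """Return the names of the questions that are still unanswered."""
        return [
            f.name
            for f in fields(self)
            if f.name != "feature" and not getattr(self, f.name).strip()
        ]


# Invented example data: three of the six questions answered so far.
entry = VocEntry(
    feature="Automatic tool change",
    who="Line operator",
    what="Swap the tool without stopping the line",
    why="Reduce changeover time",
)
print(entry.open_questions())
```

Each name returned by `open_questions` is a candidate topic for the next interview with the requirement provider.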

Afterward, we started the functionality analysis activity, in which the major functionalities were broken down into sub-functionalities and operational scenarios were analyzed. Use cases were derived from this sub-functionalities document, and the system use case specification document was created. The requirements (in the form of product understanding, business functions, feature specifications, external interfaces for software, hardware, UI, and communication, operational sequences, use cases, and non-functional requirements) were captured in the PRD. This was further refined as more information and requirements became available.

Around the same time, a parallel activity began in which a core team of senior developers looked through the source code to understand the logical flow as well as the layout in terms of classes and function calls. The customer had shared the source code of the current system that it intended to upgrade. This code analysis activity brought forth a picture of the machine that until then had not been clear to everybody. As a result of the code analysis and the study of operational sequences, the team could create UML sequence diagrams using Rational Rose, along with detailed design documents. Using a traceability matrix, the sequence diagram IDs were then traced to class names or code segments to ensure bi-directional traceability.
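To make the idea of bi-directional traceability concrete, here is a minimal sketch of a traceability matrix kept as two lookup tables. It is not the matrix format used on the project; the class `TraceabilityMatrix`, the diagram IDs such as `SD-001`, and the code element names are invented for illustration.

```python
from collections import defaultdict


class TraceabilityMatrix:
    """Bi-directional links between sequence diagram IDs and code elements."""

    def __init__(self):
        self._forward = defaultdict(set)   # diagram ID -> code elements
        self._backward = defaultdict(set)  # code element -> diagram IDs

    def link(self, diagram_id, code_element):
        """Record the link in both directions."""
        self._forward[diagram_id].add(code_element)
        self._backward[code_element].add(diagram_id)

    def code_for(self, diagram_id):
        return sorted(self._forward.get(diagram_id, ()))

    def diagrams_for(self, code_element):
        return sorted(self._backward.get(code_element, ()))

    def untraced(self, diagram_ids, code_elements):
        """Return (diagrams with no code, code elements with no diagram)."""
        no_code = sorted(d for d in diagram_ids if not self._forward.get(d))
        no_diagram = sorted(c for c in code_elements if not self._backward.get(c))
        return no_code, no_diagram


# Hypothetical IDs and class/method names.
matrix = TraceabilityMatrix()
matrix.link("SD-001", "ConveyorController.start")
matrix.link("SD-001", "MotorDriver.enable")
matrix.link("SD-002", "MotorDriver.enable")

print(matrix.code_for("SD-001"))
print(matrix.diagrams_for("MotorDriver.enable"))
print(matrix.untraced(["SD-001", "SD-003"], ["MotorDriver.enable", "SensorPoller.read"]))
```

The `untraced` check is the useful part in practice: a diagram with no code behind it, or a code segment that no diagram explains, points to a gap in either the design documents or the code analysis.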

We couldn't do all this without an eye for quality. Requirements were captured following the quality compliance guidelines stated in the standards ISO 9126, EN 50128, and IEC 60158. During the requirements specification phase, we analyzed in detail the sixty-eight-odd techniques recommended by EN 50128 and selected a few of them. The EN 50128 techniques we used are listed below:

- Cause consequence diagrams (B.6) and fault tree analysis (FTA)

- Data flow diagrams (B.12)

- Hazard and operability study (B.34)

- Impact analysis (B.35)

- Performance requirements (B.46)

- Prototyping/animation (B.49)

After a detailed review of relevant Six Sigma tools that the project could leverage, it was decided to use VOC, quality function deployment (QFD), failure mode and effect analysis (FMEA), requirements analysis matrix (RAM), orthogonal array (OA), cause and effect analysis (C-E), design structure matrix (DSM), and Pugh matrix.

Requirements management posed a challenge as we were never sure in what form the requirements would finally emerge from the customer design team. To preempt that risk, the project team defined its own demand specification template and got the customer to provide requirements in the prescribed format. The change impact analysis process was clearly defined and communicated to the customer as well as to the project team. High-level steps included:

1. Get requirements in the demand specification template

2. Expand the demand statement using VOC analysis (who, what, when, where, why, and how)

3. Analyze engineering impacts

a. The answers to the "how" questions yield clues about impacted features

b. Using the QFD, identify associated functionality and design parameters (These design parameters were the engineering items that were scrutinized to determine their impact.)

4. Review meeting to analyze if a problem requires only a hardware/machine tweak or if it requires a software change

5. Analyze software impacts

a. Using the QFD, identify all the functionalities (use cases) that are impacted

b. For each use case, analyze GUI and controller change required using a detailed functional traceability mapping (referred to as the X-Y map)

c. Conduct risk analysis based on FMEA so the potential regressive damage due to the change is minimized

6. Create the change request impact analysis document and review with customer

7. On approval, implement the change. Also, make the necessary modifications to related artifacts: PRD, UML diagrams, QFD, G-Spec, etc.
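Steps 3 through 5 of this process can be sketched in a few lines of code. The example below is an illustration under stated assumptions, not the project's tooling: the QFD relationships, the X-Y map, and the FMEA entries are invented sample data, and the function names are hypothetical. The relationship strengths use the common QFD 1/3/9 weighting, and risks are ranked by the standard FMEA risk priority number (severity × occurrence × detection).

```python
# Hypothetical QFD relationships: demand -> {use case: strength (1, 3, or 9)}
QFD = {
    "faster changeover": {"UC-Load-Recipe": 9, "UC-Calibrate": 3, "UC-Report-Status": 1},
    "remote diagnostics": {"UC-Report-Status": 9},
}

# Hypothetical X-Y map: use case -> impacted GUI screens and controller modules
XY_MAP = {
    "UC-Load-Recipe": {"gui": ["RecipeScreen"], "controller": ["RecipeLoader"]},
    "UC-Calibrate": {"gui": ["CalibrationWizard"], "controller": ["AxisController"]},
    "UC-Report-Status": {"gui": ["StatusPanel"], "controller": ["Telemetry"]},
}

# Hypothetical FMEA entries: (failure mode, severity, occurrence, detection), each 1..10
FMEA = {
    "UC-Load-Recipe": [("Wrong recipe applied", 8, 2, 3), ("Load timeout", 4, 5, 2)],
    "UC-Calibrate": [("Calibration drift undetected", 7, 3, 6)],
}


def rpn(severity, occurrence, detection):
    """Risk priority number as defined in FMEA."""
    return severity * occurrence * detection


def analyze_change(demand, min_strength=3):
    """List impacted use cases for a demand, strongest QFD relationship first."""
    report = []
    related = QFD.get(demand, {})
    for use_case, strength in sorted(related.items(), key=lambda kv: kv[1], reverse=True):
        if strength < min_strength:
            continue  # weak relationship: left out of the detailed analysis
        risks = sorted(
            ((mode, rpn(s, o, d)) for mode, s, o, d in FMEA.get(use_case, [])),
            key=lambda risk: risk[1],
            reverse=True,
        )
        impact = XY_MAP.get(use_case, {})
        report.append({
            "use_case": use_case,
            "gui": impact.get("gui", []),
            "controller": impact.get("controller", []),
            "risks": risks,
        })
    return report


for row in analyze_change("faster changeover"):
    print(row)
```

The resulting rows correspond roughly to the content of a change request impact analysis document: which use cases are touched, which GUI and controller parts change, and which failure modes deserve regression attention first.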

Testable requirements are a key ingredient of a project's success, especially when the product you're developing exists at an offshore location, as in our case. How do you test a product that most of the developers and testers have never seen except in a training video and pictures? The product has numerous motor controls and more than 300 sensors and inputs/outputs, is approximately thirteen feet wide, and stands more than a meter high. For us, building a hardware simulator seemed like the most logical solution. The benefits of the hardware simulator approach, as envisaged by the project leadership, were:

- Infrastructure and resources, such as physical space and skilled testers, are often scarce. A simulated machine takes up no more space than a regular PC and can be operated offshore by a small team of skilled software engineers.

- Cost of procuring and conducting testing using a full-fledged, hardware trial machine can be prohibitive. This type of testing should be reserved for the final pre-production launch or acceptance testing phase.

- Using a trial machine for integration and system testing is inefficient and adversely impacts the schedule.

- Due to the high costs of such industrial automation machines, dedicating even a unit for full-time testing may not be feasible for many vendors.
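As a rough illustration of the simulator idea, the sketch below replaces the machine's I/O with an in-memory stand-in so that controller logic can be exercised on an ordinary PC. It is a toy example, not the simulator built on the project: the class `SimulatedMachine`, the sensor and motor names, and the controller rule are all assumptions.

```python
class SimulatedMachine:
    """In-memory stand-in for machine I/O (hypothetical interface)."""

    def __init__(self, sensor_names):
        self._sensors = {name: False for name in sensor_names}
        self._motors = {}

    def set_sensor(self, name, value):
        """Used by the test harness to inject a sensor state."""
        if name not in self._sensors:
            raise KeyError(f"unknown sensor: {name}")
        self._sensors[name] = bool(value)

    def read_sensor(self, name):
        return self._sensors[name]

    def command_motor(self, name, speed):
        """Record the commanded speed instead of driving real hardware."""
        self._motors[name] = speed

    def motor_speed(self, name):
        return self._motors.get(name, 0)


def conveyor_step(machine):
    """Toy controller rule: run the conveyor unless a part is at the end stop."""
    if machine.read_sensor("end_stop"):
        machine.command_motor("conveyor", 0)
    else:
        machine.command_motor("conveyor", 100)


machine = SimulatedMachine(["end_stop"])
conveyor_step(machine)
print(machine.motor_speed("conveyor"))  # conveyor commanded to run

machine.set_sensor("end_stop", True)
conveyor_step(machine)
print(machine.motor_speed("conveyor"))  # conveyor commanded to stop
```

Because the controller code talks only to the machine interface, the same logic can later be pointed at the real I/O layer for the final trial-machine tests.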

What seemed like an overwhelming, uphill task at the beginning of the project was accomplished with relatively high confidence thanks to the robust process model adopted for the requirements phase. The methodology, though fraught with the risk of not being able to elicit all required information before design and coding, used a top-down approach that proved extremely practical in hindsight. The repeatability and structure that this hybrid model, based on Six Sigma and organizational best practices, brought to our requirements gathering initiative were unmatched by anything else we saw during the course of this project's execution.

The views expressed in this article are those of the author. Wipro Technologies does not subscribe to the substance, veracity, or truthfulness of the said opinion.